Python - Automatic Type Hinting With MonkeyType

I’ve been poking around with adding type hints to Python code automatically. Here’s my process so far:

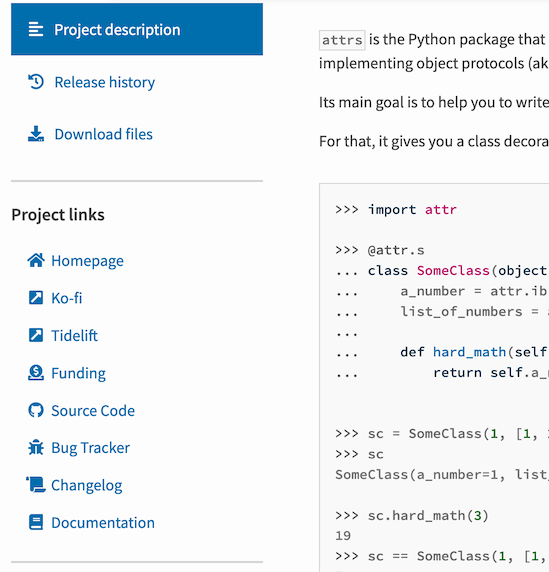
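MonkeyType itself is driven from the command line (for example `monkeytype run script.py`, then `monkeytype stub some.module` or `monkeytype apply some.module`). The core idea is to record the types a function actually receives and returns at runtime. The sketch below illustrates that idea with `sys.setprofile`. It is a simplified stand-in, not MonkeyType's implementation, and `trace_types`, `format_signature`, and `add` are hypothetical names.

```python
import sys
from collections import defaultdict
from types import FrameType
from typing import Any, Callable, DefaultDict, Dict, List, Set, Tuple

# Parameter name (or "return") -> names of the runtime types observed for it.
Observed = DefaultDict[str, Set[str]]
Call = Tuple[Tuple[Any, ...], Dict[str, Any]]


def trace_types(func: Callable[..., Any], calls: List[Call]) -> Observed:
    """Call func once per (args, kwargs) pair and record the types it sees."""
    target = func.__code__
    observed: Observed = defaultdict(set)

    def profiler(frame: FrameType, event: str, arg: Any) -> None:
        # Ignore every frame except calls to the function being traced.
        if frame.f_code is not target:
            return
        if event == "call":
            # Positional-or-keyword parameters come first in co_varnames.
            for name in target.co_varnames[: target.co_argcount]:
                observed[name].add(type(frame.f_locals[name]).__name__)
        elif event == "return":
            observed["return"].add(type(arg).__name__)

    sys.setprofile(profiler)
    try:
        for args, kwargs in calls:
            func(*args, **kwargs)
    finally:
        sys.setprofile(None)  # always uninstall the hook
    return observed


def format_signature(func: Callable[..., Any], observed: Observed) -> str:
    """Render the observed types as a stub-style signature (PEP 604 unions)."""
    code = func.__code__

    def union(names: Set[str]) -> str:
        return " | ".join(sorted(names)) if names else "Any"

    params = ", ".join(
        f"{name}: {union(observed[name])}"
        for name in code.co_varnames[: code.co_argcount]
    )
    return f"def {func.__name__}({params}) -> {union(observed['return'])}: ..."


def add(a, b):
    return a + b


observed = trace_types(add, [((1, 2), {}), ((1.5, 2), {})])
print(format_signature(add, observed))
# def add(a: float | int, b: int) -> float | int: ...
```

As with any runtime-tracing approach, the result only reflects the calls that were actually exercised, so the inferred hints are a starting point to review rather than a final answer.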

I saw that the attrs project links to its changelog on PyPI.

It turns out that link is controlled by the project_urls argument to setup() in setup.py.

More details: Python Docs
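In setup.py, project_urls is a dict mapping a label to a URL, for example `project_urls={"Changelog": "https://example.com/changelog"}`. setuptools writes each entry into the package's core metadata as a `Project-URL: label, URL` field, and those fields are what PyPI shows as project links. A minimal sketch that reads such fields back with the standard library; the package name and URLs are placeholders.

```python
from email.parser import HeaderParser
from typing import Dict

# Hypothetical PKG-INFO/METADATA excerpt, shaped like what setuptools writes for
# setup(..., project_urls={"Changelog": ..., "Source": ...}).
PKG_INFO = """\
Metadata-Version: 2.1
Name: example-package
Version: 0.1.0
Project-URL: Changelog, https://example.com/changelog
Project-URL: Source, https://example.com/source
"""


def project_urls(pkg_info: str) -> Dict[str, str]:
    """Parse the "label, URL" pairs out of Project-URL metadata fields."""
    message = HeaderParser().parsestr(pkg_info)  # core metadata uses email-style headers
    urls: Dict[str, str] = {}
    for entry in message.get_all("Project-URL", []):
        label, _, url = entry.partition(",")
        urls[label.strip()] = url.strip()
    return urls


print(project_urls(PKG_INFO))
```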

I recently saw a Dockerfile based on the official Debian Docker image that was installing dependencies into a virtualenv.

I’m pretty sure using a virtualenv in a Dockerfile based on the official Debian image is unnecessary, because the image doesn’t ship a system Python to isolate from:

```
$ docker run -it debian /bin/bash
root@21ca17310079:/# python
bash: python: command not found
```
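Once Python is installed in an image, a quick way to check whether a given interpreter is running inside a virtualenv is to compare sys.prefix with sys.base_prefix: a venv points sys.prefix at the environment directory, while sys.base_prefix keeps pointing at the base installation. A small sketch (the helper name is hypothetical):

```python
import sys


def in_virtualenv() -> bool:
    """True when this interpreter is running inside a venv or virtualenv."""
    # Legacy virtualenv (before version 20) set sys.real_prefix instead.
    if getattr(sys, "real_prefix", None) is not None:
        return True
    return sys.prefix != sys.base_prefix


print(f"prefix={sys.prefix} base_prefix={sys.base_prefix} venv={in_virtualenv()}")
```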

I learned about the “git pickaxe” this week, and I used it to find the first commit with a line of code that had been moved between a few different files.

More details: https://remireuvekamp.nl/blog/the-git-pickaxe.html
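The pickaxe is `git log -S<string>`, which lists commits that change the number of occurrences of the string in a file; adding `--reverse` puts the oldest match first. Because moving a line between files lowers the count in one file and raises it in another, the move commits match too. The toy model below applies that rule to a hypothetical in-memory history (a list of `{path: contents}` snapshots, oldest first); it illustrates the matching rule, not how Git is implemented.

```python
from typing import Dict, List

# One snapshot per commit, oldest first: path -> file contents.
Snapshot = Dict[str, str]


def pickaxe(history: List[Snapshot], needle: str) -> List[int]:
    """Return indices of commits where some file's count of `needle` changes."""
    matches: List[int] = []
    previous: Snapshot = {}
    for index, snapshot in enumerate(history):
        paths = set(previous) | set(snapshot)
        if any(
            previous.get(path, "").count(needle) != snapshot.get(path, "").count(needle)
            for path in paths
        ):
            matches.append(index)
        previous = snapshot
    return matches


history: List[Snapshot] = [
    {"a.py": "x = 1\n"},
    {"a.py": "x = 1\nsecret()\n"},              # line added
    {"a.py": "x = 1\n", "b.py": "secret()\n"},  # line moved to b.py
    {"a.py": "x = 2\n", "b.py": "secret()\n"},  # unrelated change
]

matches = pickaxe(history, "secret()")
print(matches)     # [1, 2]
print(matches[0])  # 1: the oldest match, like the first entry of `git log --reverse -S`
```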

I learned about apt-get install’s --no-install-recommends flag, and I used it to keep unnecessary “recommended” packages from being installed. This helped reduce some unnecessary bloat in a Docker image.

More details: https://ubuntu.com/blog/we-reduced-our-docker-images-by-60-with-no-install-recommends

Today I learned about the amazing WLED project: https://github.com/Aircoookie/WLED

All you need to do is flash their binary to an ESP32, and it will bring up a Wi-Fi access point that lets you control the connected LEDs through an app or a web page.

I really liked this talk by Martin Fowler about Event Driven Architecture: https://youtu.be/STKCRSUsyP0

It’s interesting to think about Git as an example of event sourcing.
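In event sourcing, the source of truth is an append-only log of events, and the current state is derived by replaying them; any past state can be rebuilt by replaying a prefix of the log, much like checking out an old commit. A minimal sketch with hypothetical "write"/"delete" events. Git actually stores a snapshot per commit rather than a list of edits, so this is an analogy, not a model of Git's storage.

```python
from typing import Dict, List, Tuple

# Hypothetical events: (kind, path, contents). The log is only ever appended to.
Event = Tuple[str, str, str]


def replay(events: List[Event]) -> Dict[str, str]:
    """Rebuild state by applying every event in order."""
    state: Dict[str, str] = {}
    for kind, path, contents in events:
        if kind == "write":
            state[path] = contents
        elif kind == "delete":
            state.pop(path, None)
        else:
            raise ValueError(f"unknown event kind: {kind}")
    return state


log: List[Event] = [
    ("write", "README", "hello"),
    ("write", "main.py", "print(1)"),
    ("write", "README", "hello, world"),
    ("delete", "main.py", ""),
]

print(replay(log))      # current state: {'README': 'hello, world'}
print(replay(log[:2]))  # state after the second event: {'README': 'hello', 'main.py': 'print(1)'}
```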

I’ve been learning more about async communication between microservices and came across this article that talks about some of the trade-offs between sync and async communication: https://dzone.com/articles/patterns-for-microservices-sync-vs-async
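One way to picture the difference: with synchronous communication the caller waits for the other service's reply, while with asynchronous messaging it publishes a message and carries on, and a consumer handles it later. A minimal in-process sketch, using asyncio.Queue as a stand-in for a message broker; the service names and order IDs are hypothetical.

```python
import asyncio
from typing import List


async def order_service(queue: "asyncio.Queue[str]", log: List[str]) -> None:
    """Publish events without waiting for anyone to process them."""
    for order_id in ("A1", "B2"):
        await queue.put(order_id)  # does not block on an unbounded queue
        log.append(f"published {order_id}")


async def email_service(queue: "asyncio.Queue[str]", log: List[str]) -> None:
    """Consume events at its own pace."""
    while True:
        order_id = await queue.get()
        await asyncio.sleep(0)  # stand-in for slow work, e.g. sending an email
        log.append(f"emailed {order_id}")
        queue.task_done()


async def main() -> List[str]:
    queue: "asyncio.Queue[str]" = asyncio.Queue()
    log: List[str] = []
    consumer = asyncio.create_task(email_service(queue, log))
    await order_service(queue, log)
    await queue.join()  # wait until every published message has been handled
    consumer.cancel()
    return log


print(asyncio.run(main()))
# ['published A1', 'published B2', 'emailed A1', 'emailed B2']
```

The publisher finishes before any message is processed, which is the decoupling (and the eventual consistency) that async communication trades for.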

My new job has me working on a larger codebase than previous jobs, and it’s also my first time using mypy.

I’m starting to understand why Guido’s work on mypy had a lot to do with Dropbox’s Python 3 migration. Dropbox wrote an article with details on how they used mypy on their “4 million lines of Python”. With that much code, I can understand why they needed to treat code like “cattle, not pets”.

Python 3 disallows ordering comparisons involving None (such as None < 1), makes huge changes to strings and bytes, and has many other changes involving types. When your codebase is massive, you have to reach for automated tooling to find and prevent those bugs consistently. The same tooling can also keep entire categories of other bugs from reaching production, and the type hints double as helpful documentation. For those reasons and more, I think adding type hints to a large codebase like Dropbox’s will definitely be worth it in the long run.
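For example, Python 2 quietly allowed comparisons like None < 1, while Python 3 raises TypeError. A function like the hypothetical latest below passes tests until it meets a missing value; with the Optional annotation, mypy reports the unsafe comparison before the code ever runs, and an explicit None check fixes it.

```python
from typing import Optional


def latest(a: Optional[int], b: int) -> int:
    # mypy reports an error here because `a` may be None.
    return a if a > b else b


def latest_checked(a: Optional[int], b: int) -> int:
    # Narrowing with an explicit None check satisfies mypy and avoids the TypeError.
    if a is None:
        return b
    return a if a > b else b


try:
    latest(None, 3)
except TypeError as exc:
    print(f"TypeError: {exc}")

print(latest_checked(None, 3))  # 3
```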

It has me wondering if I should have been taking the extra time to use type hints and type checking (or a statically typed language) this whole time. Was going without type hints one less distraction? Or will the price be paid later in maintenance difficulties and bug fixes?

At this point, not much of the Python ecosystem has type hints (not even Python 2-compatible, comment-style type hints). I’m starting to think it would be a good use of time to work on changing that.
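For reference, PEP 484 comment-style hints look like the sketch below. They are ordinary comments, so the code still runs on both Python 2 and 3, while mypy reads them as annotations. The function is a hypothetical example.

```python
from typing import Dict, List  # on Python 2 this needs the "typing" backport from PyPI


def word_counts(words, minimum=1):
    # type: (List[str], int) -> Dict[str, int]
    """Count words, keeping only those seen at least `minimum` times."""
    counts = {}  # type: Dict[str, int]
    for word in words:
        counts[word] = counts.get(word, 0) + 1
    return {word: n for word, n in counts.items() if n >= minimum}


print(word_counts(["a", "b", "a"], minimum=2))  # {'a': 2}
```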

I migrated this blog from Blogger/Blogspot to a static site generated with Jekyll and hosted with Netlify.

This blog post describes the process: http://joshualande.com/jekyll-github-pages-poole

Initially, I used Python’s Pelican, but pelican-import (Pelican’s tool for migrating from Blogger) doesn’t work as well as jekyll-import. It turned comments into posts and threw exceptions while processing draft posts without content. Also, the first Pelican docs that show up on Google aren’t the latest docs. This caused issues when I followed old docs that said to use Python 2.7, but Pelican only supports Python 3.6+ now.